Table of Contents

- What Is Artificial Intelligence?

- What Is Speech Translation?

- History of AI Speech Translation

- The Past, Present, and Future of Artificial Speech Translation

- Key Takeaways

- Conclusion

- FAQs

Speech translation is not a new idea. Technology enthusiasts have long dreamt of perfecting automatic speech translation, and the first speech translation system dates back to 1991. Today, AI-driven speech translation is built into many of our smart devices.

Google’s Pixel Buds work with Google Translate to deliver smooth voice translation through the smartphone app. Skype also offers a speech translation feature. People increasingly expect technology that makes real-time speech translation possible.

What Is Artificial Intelligence?

Artificial intelligence (AI) is a broad branch of computer science concerned with building machines and systems capable of performing tasks that typically require human intelligence. Siri, Alexa, other smart assistants, email spam filters, AI web content writing, Netflix’s viewing recommendations, and robo-advisors are all examples of AI in use. At its core, AI is an effort to simulate human intelligence in machines, and its goals and outcomes have been the subject of considerable debate and controversy.

What Is Speech Translation?

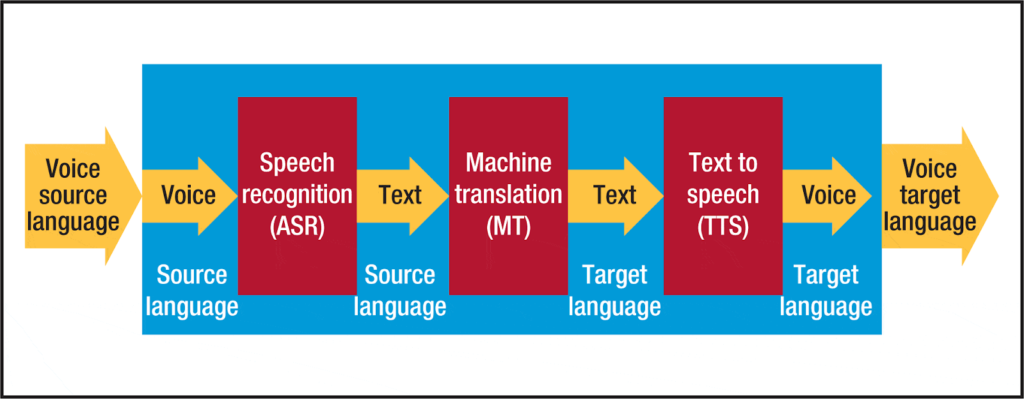

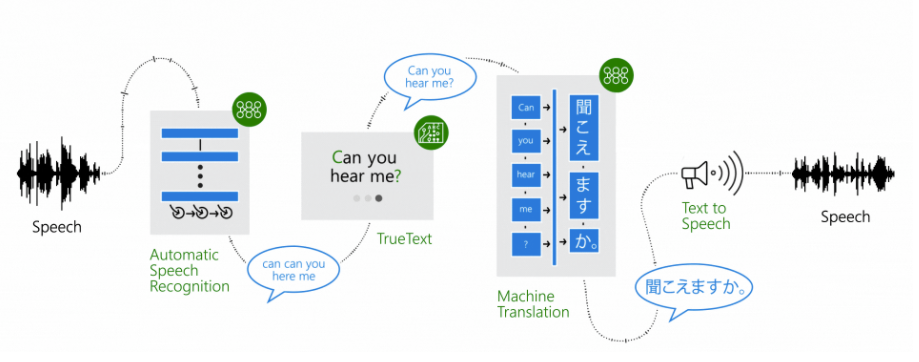

Speech translation is the process by which spoken phrases are quickly translated and then spoken aloud in another language (the target language). The technology enables seamless communication between speakers of different languages. Speech translation systems combine three main software components: automatic speech recognition, machine translation (MT), and speech synthesis.

When you speak into a microphone, the speech recognition module converts what you said into text. The machine translation module translates that text into the target language, and the speech synthesis module speaks the result aloud.
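The three-stage flow described above can be sketched in a few lines of Python. This is only an illustration of how the stages chain together, not how any real product is built: the class name `SpeechTranslator`, the toy `PHRASEBOOK`, and the three `fake_*` functions are all hypothetical stand-ins, and the "audio" here is simply text encoded as bytes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SpeechTranslator:
    """Chains the three stages: recognition -> translation -> synthesis."""
    recognize: Callable[[bytes], str]    # audio in, source-language text out
    translate: Callable[[str], str]      # source text in, target text out
    synthesize: Callable[[str], bytes]   # target text in, audio out

    def run(self, audio: bytes) -> bytes:
        text = self.recognize(audio)
        translated = self.translate(text)
        return self.synthesize(translated)


# Hypothetical stand-ins for real modules (assumptions for illustration only).
PHRASEBOOK = {"good morning": "bonjour", "thank you": "merci"}


def fake_recognize(audio: bytes) -> str:
    # Pretend the audio bytes are just UTF-8 text.
    return audio.decode("utf-8").strip().lower()


def fake_translate(text: str) -> str:
    # Look the phrase up; fall back to the input if it is unknown.
    return PHRASEBOOK.get(text, text)


def fake_synthesize(text: str) -> bytes:
    # Pretend that encoding the text is the same as speaking it.
    return text.encode("utf-8")


translator = SpeechTranslator(fake_recognize, fake_translate, fake_synthesize)
print(translator.run(b"Good morning").decode("utf-8"))  # prints "bonjour"
```

Keeping each stage as a separate function means any one of them could be swapped for a real speech recognition, translation, or synthesis module without touching the other two.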

History of AI Speech Translation

Automatic speech translation is one of the more alluring niches of the digital realm. The use of technology for translation dates back to 1947, when Warren Weaver, then director of the Division of Natural Sciences at the Rockefeller Foundation, observed the results of machine decoding used during wartime.

He concluded that gaps in the cryptographic approach were causing accuracy problems in translation. The first machine translation systems were government-funded initiatives that emerged after the Second World War, at a time when there was no commercial computing market anywhere in the world.

Back then, machines were the size of a whole automobile, and the best translation they could do was rule-based. The shortcomings of decoding machines gave rise to the idea that machines should be able to translate autonomously, using the rules of grammar and language.

In 1954, an IBM 701 computer was used to translate about 49 sentences on chemistry from Russian to English, in a demonstration known as the Georgetown-IBM experiment. This was a great achievement and an early successful step toward non-numerical computer applications. It generated a lot of initial excitement, until flaws such as unsound syntax and grammatical errors were discovered in its output.

Then, in 1976, a prototype of the Canadian METEO system was launched. It translated meteorological forecasts between English and French and was used primarily by Environment Canada.

In 1992, machine translation services went live for the first time, offering subscribers translations from English to German. In 1997, AltaVista’s “Babel Fish” was born. The name was inspired by Douglas Adams’ popular series The Hitchhiker’s Guide to the Galaxy. Babel Fish was capable of translating German, English, Dutch, French, Italian, and Spanish. Although this was a major leap for early machine translation, Babel Fish was not reliable at picking the right translation for words with multiple meanings, and the translations it produced often failed to preserve meaning.

Inspired by the work of Alan Turing (the British scientist who tried to make a computer emulate human thought), Kwabena Boahen, an electrical engineer, advanced the concept of the Neurogrid. This also highlighted that teaching computers human language is achievable only when the language is expressed in the raw numerical form that computers work with, fed to them as data.

The Past, Present, and Future of Artificial Speech Translation

While several earlier attempts were made to teach computers human language, modern speech translation systems like Google Translate take a more practical approach: they feed a computer large amounts of data in the form of parallel texts for the languages it is required to translate.

This type of system is largely self-learning. Computerized translation has come a long way, but it is still not fully accurate. You can test this quickly for yourself: go to a website, copy the URL of any one of its stories, and paste the URL into Google Translate. The output will often fail to bring out the full context of the story.
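To get a feel for what learning from parallel texts means, here is a deliberately tiny sketch. It is not how Google Translate or any production system works; it only counts which words appear together across sentence pairs and guesses a translation from those counts. The corpus `PARALLEL_TEXTS` and the function names are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy parallel corpus: (English, French) sentence pairs.
PARALLEL_TEXTS = [
    ("the house", "la maison"),
    ("the car", "la voiture"),
    ("a house", "une maison"),
    ("a car", "une voiture"),
]


def count_cooccurrences(pairs):
    """Count how often each source word appears alongside each target word."""
    counts = defaultdict(Counter)
    for source, target in pairs:
        for s_word in source.split():
            for t_word in target.split():
                counts[s_word][t_word] += 1
    return counts


def best_guess(word, counts):
    """Return the target word seen most often with `word`, or None if unseen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


counts = count_cooccurrences(PARALLEL_TEXTS)
for word in ("house", "car", "the"):
    print(word, "->", best_guess(word, counts))
```

Even with four sentence pairs, "house" lines up with "maison" more often than with any other word. Real systems use vastly more data and far more sophisticated models, but the principle of learning from aligned examples rather than hand-written rules is the same.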

Current MT systems rely largely on analysis, but natural languages are not strictly analytical in form. Context, intonation, and references to time and place all lead to inconsistency in speech translation. Without cultural immersion, the accurate meaning of a translated word, sentence, or piece of text cannot be fully understood.

Even between languages that use the Latin alphabet, text constantly shrinks or expands in translation. For languages that do not use the Latin alphabet, or that are written in a different direction (as in Japanese tategaki, which runs vertically in columns ordered from right to left, and Arabic, which is read from right to left), translations tend to be even less accurate. But this is definitely not the end of innovation and technological development in the field of AI speech translation. It is, in fact, just the beginning.

Google is working on integrating speech translation seamlessly into Android phones. In 2014, Microsoft previewed Skype’s real-time speech translation feature, which can translate live video calls in as many as 45 languages. Google now also lets its users suggest better translations. Applications like Duolingo help people all around the world understand other languages better and use the power of speech translation to nail correct pronunciation.

Key Takeaways

- Artificial intelligence is a broad branch of computer science concerned with building machines and systems capable of performing tasks that typically require human intelligence.

- The origins of incorporating technology in the process of speech translation date back to 1947.

- While several attempts were earlier made to teach computers human language, modern speech translation systems like Google Translate are more practically designed.

- Context, intonation, and reference to time and place are all factors that lead to inconsistency in speech translation.

- For languages that do not use the Latin alphabet, or that are written in a different direction, speech translations tend to be less accurate.

- Applications like Duolingo help people all around the world understand other languages better and use the power of speech translation to nail correct pronunciation.

Conclusion

Artificial speech translation is sure to improve in the coming years. Whether it will ever deliver accurate and flawless translations remains an open question. But one thing is certain: advancements in the field of speech translation will help people overcome the language barriers they face when they migrate to a new place for work, education, and more.

FAQs

The process that recognizes spoken language and converts it into text is known as speech-to-text translation. This is done using computational linguistics.

Artificial intelligence can be broadly categorized into three types: narrow or weak AI, general or strong AI, and artificial super intelligence.

Artificial intelligence is highly useful in today’s world. It can be used in optimizing production services, content writing, providing personalized recommendations to people by analyzing their online consumption patterns, planning inventory and handling logistics, etc. Today, AI has become so advanced that it can perform several human tasks.

There have been recent advances in the field of machine learning and machine translation. These have been combined with speech recognition and the development of smartphone applications that help people translate words and sentences on the go. There has been immense growth in the world of translation. Language gaps can now be bridged easily and effectively, just with the use of a simple cell phone.

AI translators are digital tools that use powerful artificial intelligence to translate words that are typed or spoken. Sometimes they can even take into account the context in which the content was created. They are more accurate than basic machine translation.
