Ethical & Responsible Use Of AI: An Unwritten Pact With Users

AI has brought unprecedented opportunities to businesses. However, along with opportunities comes incredible responsibility. AI, as a technology, has transformed the way we communicate, work, and create. Because it has a direct effect on people’s lives, it is essential to have a defined set of guidelines around data governance, AI ethics, trust, and legality.

What is Responsible AI?

Responsible AI is the practice of designing, developing, and deploying AI in a way that is ethically and socially trustworthy.

Google and Microsoft are two examples worth mentioning. Both organizations have created a set of values that everyone on their in-house teams who builds or uses AI is expected to follow at work.

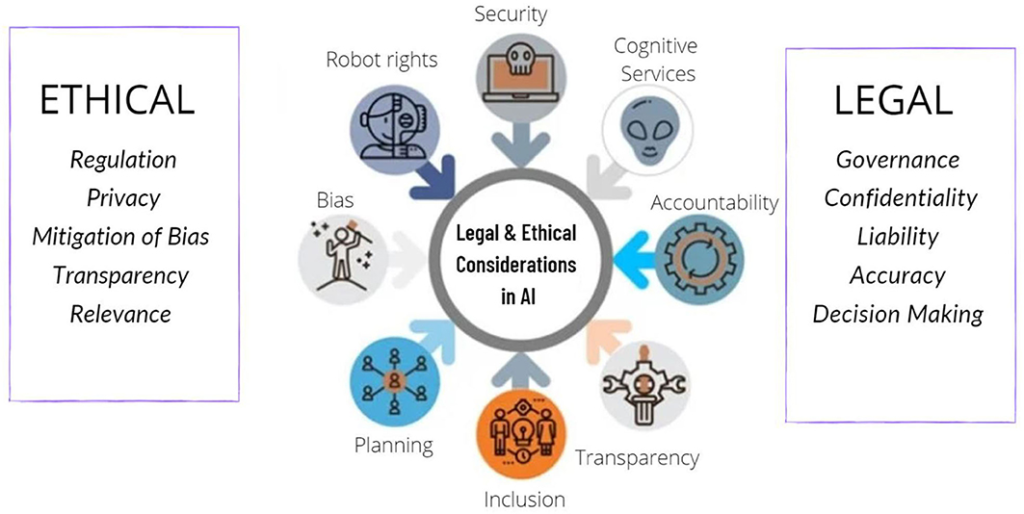

What is Ethical AI?

Ethical AI upholds moral values and enables accountability. Responsible use of AI facilitates ethical AI systems and solutions from design to deployment. Ethical AI can be customized, but the core criteria must include soundness, transparency, fairness, accountability, privacy, robustness, and sustainability.

The world has already witnessed several breaches of AI ethics. The app Lensa AI relied on billions of photographs sourced from the internet without consent to generate cartoon-like profile pictures. Another example is people using ChatGPT to write essays or win coding contests. As AI has grown, AI ethics has become even more crucial for the responsible use of AI and for mitigating risks.

Principles for Ethical and Responsible Use of AI

AI has incredible potential to benefit future generations. However, advancements in technology also raise multiple challenges. Every AI system should be governed by a code of ethics, which in turn guides the responsible use of AI. The principles below are a set of guidelines for developing technology that supports the responsible use of AI.

1. Create awareness about AI

First and foremost, it is important to communicate the benefits and challenges of AI clearly. Just as AI can be a great tool in global digitalization and development, it can also be used for the wrong reasons. Thus, it is critical to keep AI within ethical boundaries. Organizations should understand how AI algorithms are used in making decisions and should be able to communicate this. Everyone, employees as well as users, needs to understand the repercussions of unethical AI practices.

2. Be transparent

Every brand needs to be transparent and honest about how it uses AI. Brands need to be transparent with their customers about the data they collect, how the data will be used, and how it will benefit customers. It is important for customers to be able to trust organizations and the work they do.

You can make AI transparent by documenting the system’s behavior during development and testing and by communicating to end users how a model works. People should be made aware of the kind of AI system being used, its purpose, the factors that affect the final outcome, and how any mistakes can be corrected.

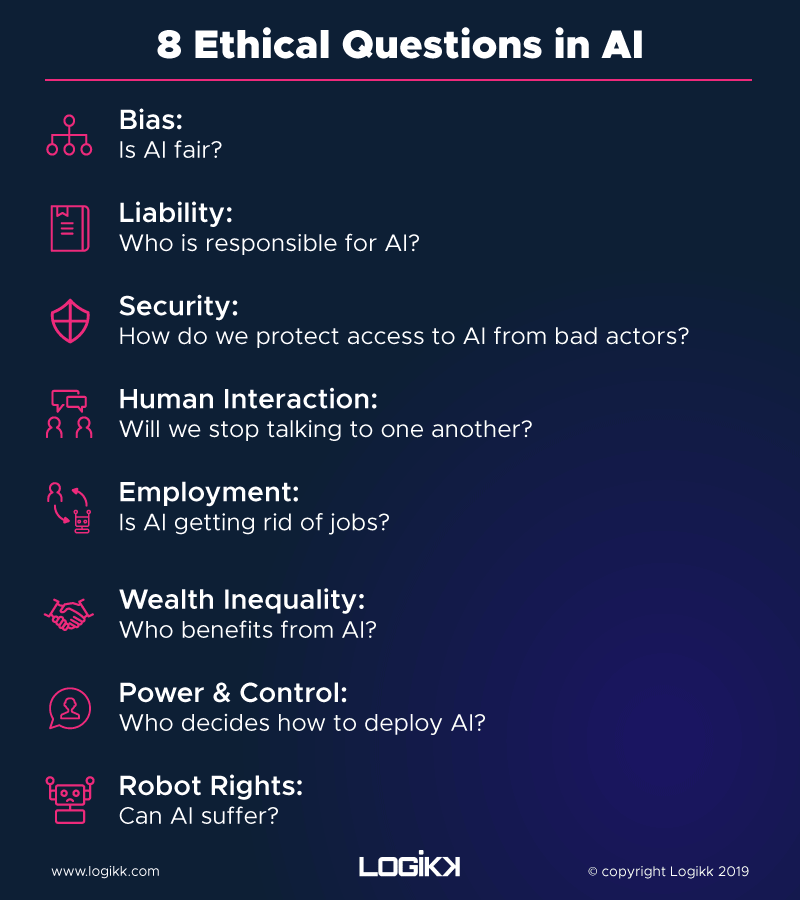

3. Control bias

Using unbiased data for training AI is considered responsible use of AI. Creating unbiased data sets is a way to address gender, racial, and other biases in the world. Unlike humans, computer systems should be unbiased when making decisions. However, AI learns from real-world data, which can be biased and can therefore lead to unfair decisions. This principle ensures that AI systems do not harm customers through inequitable treatment.

Currently, white men are overrepresented among the people working on AI. The focus should be on getting more diverse people to build AI systems so that those systems truly represent society as a whole. ImageNet is an example of AI ethics not being followed: because the data set included more white faces than non-white faces, AI systems worked better on white faces. Another example dates from 2019, when Apple launched its credit card with online applications and men were reportedly given much higher credit limits than women.
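As a rough illustration of how inequitable treatment can be measured, the sketch below compares approval rates across groups and reports the largest gap between them. The group labels, the sample decisions, and the function names are all made up for this example; it shows one simple fairness check, not a complete bias audit.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions, invented purely for illustration.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(approval_rates(sample))
print(parity_gap(sample))
```

A large gap does not prove discrimination on its own, but it is a signal that the training data and the model deserve a closer look.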

4. Follow the rules

Always adhere to the established rules when it comes to the responsible use of AI. Even though regulation of AI is increasing in Europe and the US, many areas remain unregulated. Many organizations have started applying their own self-regulation in addition to the established rules. Organizations also turn to an AI ethics council to address ethical concerns. AI should be built in a way that respects human rights, laws, diversity, and democratic values. Risks linked to AI must be continuously monitored and managed by the organizations developing it.

5. Privacy and security

Every AI system should be designed to resist attacks and protect users’ private information. Because AI learns from training data, which in some cases, such as in the healthcare industry, can be quite sensitive, privacy and security should be a key focus.

AI systems must comply with the rules of regulatory bodies and with privacy laws related to data collection, storage, and processing. To ensure the responsible use of AI, organizations should implement access control restrictions and develop data management strategies to handle data responsibly.
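To make the idea of access control restrictions more concrete, here is a minimal sketch of role-based access to a sensitive record. The roles, permission names, record fields, and secret key are hypothetical and chosen only for illustration; a real system would rely on a vetted identity and key management setup.

```python
import hashlib
import hmac

# Hypothetical roles and permissions, defined only for this example.
ROLE_PERMISSIONS = {
    "clinician": {"read_identified"},
    "data_scientist": {"read_pseudonymized"},
}


def pseudonymize(value: str, secret: bytes) -> str:
    """Replace an identifier with a keyed hash so records can still be linked."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()


def read_record(record: dict, role: str, secret: bytes) -> dict:
    """Return the view of a record that the given role is allowed to see."""
    permissions = ROLE_PERMISSIONS.get(role, set())
    if "read_identified" in permissions:
        return dict(record)
    if "read_pseudonymized" in permissions:
        masked = dict(record)
        masked["patient_id"] = pseudonymize(record["patient_id"], secret)
        masked.pop("name", None)  # drop the direct identifier entirely
        return masked
    raise PermissionError(f"role {role!r} may not read patient records")


# Made-up record and key for demonstration purposes.
record = {"patient_id": "P-001", "name": "Jane Doe", "diagnosis": "asthma"}
key = b"example-secret-key"

print(read_record(record, "data_scientist", key))
```

In this sketch, people who train models see only pseudonymized records, while any role without an explicit permission is refused.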

6. Accountability

Organizations involved in building AI systems should also be accountable for the ethical implications of their products. There should be clear roles and responsibilities that specify accountability and meet the organization’s compliance requirements.

Most AI systems are part of a complex supply chain involving data providers, technology providers, data labelers, and systems integrators. Thus, each party’s degree of accountability should be clearly defined.

Implementing Ethical AI

AI, without fail, must be designed, developed, and deployed ethically. By following the steps below, you can build operational, customized, scalable, and sustainable AI systems.

1. Ethics council

A committee or a governance board should be set up to take care of privacy, fairness, and other data-related risks. Apart from employees, external subject matter experts should be a part of the committee.

2. Ethical AI framework

Creating an ethical AI risk framework is one of the best approaches to controlling ethical issues. This framework identifies the ethical standards set by applicable regulations and ensures that the system adheres to them. The framework must also meet quality assurance guidelines for designing and developing ethical AI systems.

3. Tools and enablers

Organizations must focus on developing tools and techniques that can be integrated into AI systems and platforms to support the responsible use of AI. AI systems should explain how they reached a decision, especially a critical one. Certain tools are designed to evaluate the accuracy of, and the reasoning behind, AI’s decision-making.
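One simple way a system can explain how it reached a decision is to report how much each input contributed to the result. The sketch below does this for a basic linear scoring model. The feature names, weights, and values are invented for the example, and more complex models would need dedicated explanation techniques.

```python
def explain_score(weights, bias, features):
    """Return the score and each feature's contribution, largest impact first."""
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical model and applicant, made up for illustration.
weights = {"income": 2.0, "debt": -3.0, "years_employed": 0.5}
applicant = {"income": 4, "debt": 2, "years_employed": 6}

score, ranked = explain_score(weights, bias=1.0, features=applicant)
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

Listing the contributions in order of impact gives end users and reviewers a starting point for questioning or correcting a decision.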

4. Awareness

An ethical culture in an organization helps keep track of the system at every stage of development. Organizations should elevate the responsible use of AI to a key business imperative. It should also be mandatory to train employees in ethical AI principles.

With the advancement in technology come opportunities as well as challenges. Ethical and responsible use of AI is and will always continue to be a key focus area in the advancement of technology. Today, with AI playing a critical role in almost every industry, enabling digital strategies and transformations, it is essential to specify clear ethical principles that lead to the responsible use of AI.

Research and development should aim at creating standardized guidelines for the responsible use of AI, which should be implemented by organizations using AI. To prevent potential misuse of AI, companies must follow ethical guidelines and regulate their AI programming in advance. Only by setting a clear governance framework can organizations implement responsible use of AI.

FAQs

AI, if left ungoverned, has the potential to put customer safety and privacy at risk. It weakens the trust in technology. The consequence of using AI unethically will impact not only the business but its customers and employees as well.

Ethical AI respects human dignity, freedom, and rights. Businesses that want to offer responsible use of AI should comply with the principles of ethics in AI that protect privacy, civil liberties, and civil rights.

There are 11 clusters of principles in the ethics guidelines for AI. These include transparency, non-maleficence, justice and fairness, responsibility, freedom and autonomy, privacy, beneficence, trust, sustainability, solidarity, and dignity.

A code of ethics is crucial to promote an environment of trust, integrity, ethical behavior, and excellence.
