Table of Contents

- What is Natural Language Processing?

- The Evolution of Natural Language Processing

- Importance of Natural Language Processing

Natural language processing (NLP) is a subfield of linguistics and computer science that studies the interactions between human language and computer systems. It overlaps heavily with computational linguistics and with the language-focused branch of artificial intelligence.

NLP has traditionally focused on widely spoken languages such as English or French, in applications meant for human users. As the field has evolved, new potential applications have emerged, such as law enforcement analysis of criminal profiles, medical diagnosis, and treatment supported by personalized medicine dashboards.

It’s not just an academic theory anymore; it’s everywhere. It has become ubiquitous in entertainment, education, and many other areas where we use technology daily. This article will explore the evolution of NLP from the 1940s until today.

What is Natural Language Processing?

Human language is a complex ability unique to humans. There are thousands of human languages with millions of words, and many of those words have multiple meanings, which complicates matters further.

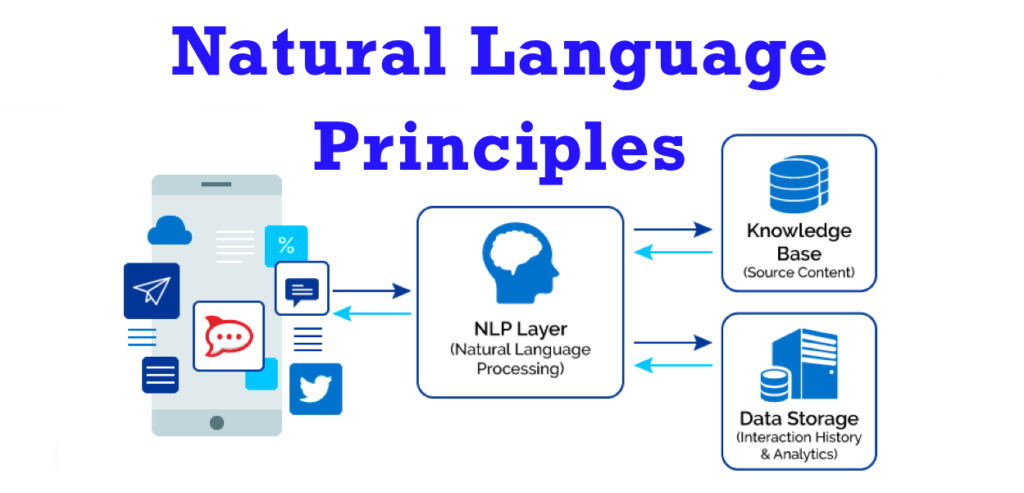

Computers can perform several high-level tasks, but the one thing they have lacked is the ability to communicate like human beings. NLP is an interdisciplinary field of artificial intelligence and linguistics that bridges this gap between computers and natural languages.

There are effectively unlimited ways to arrange words in a sentence, so it is impossible to build a database of every sentence in a language and feed it to computers. Even if that were possible, computers could not directly understand or process how we speak or write, because language is unstructured from a machine’s point of view.

Therefore, it is essential to convert sentences into a structured form that computers can work with. Many words have multiple meanings (a dictionary alone cannot resolve this ambiguity, so computers also need to learn grammar), and pronunciation also differs by region.

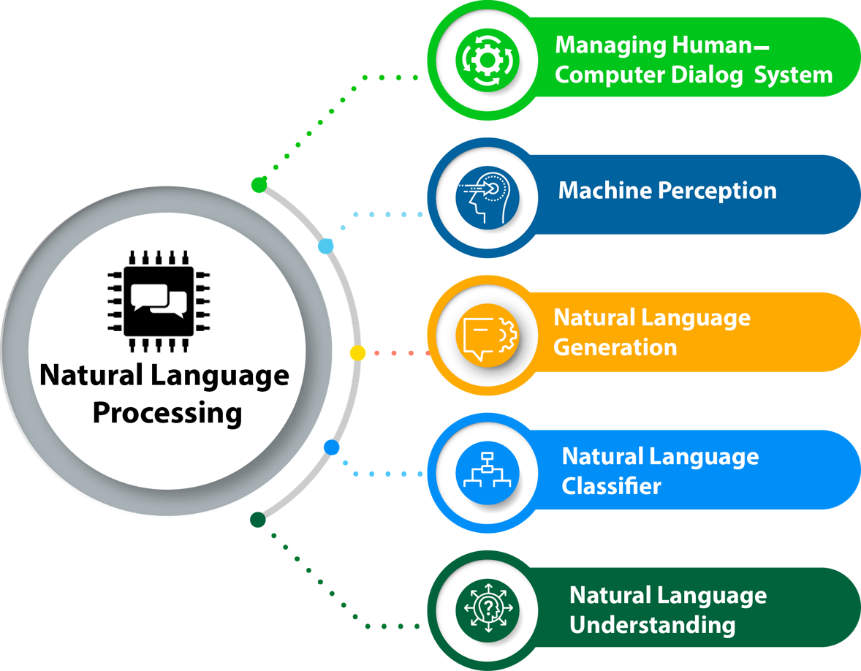

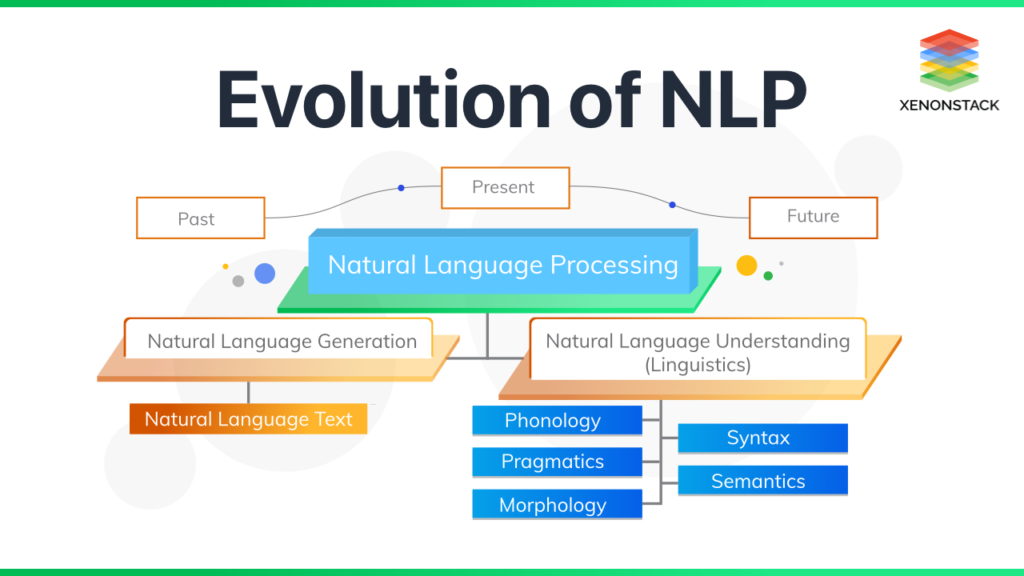

NLP’s function is to translate between unstructured text and structured representations, thus helping machines understand human language. When you go from the unstructured to the structured form (transforming natural language into an informative representation), it is called natural language understanding (NLU).

It is known as natural language generation (NLG) when you go from structured to unstructured (producing meaningful phrases from internal representation).
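To make the two directions concrete, here is a minimal sketch using only Python's standard library. The sentence pattern, the field names (`name`, `quantity`, `item`), and the functions `understand` and `generate` are assumptions invented for this illustration; real NLU and NLG systems are far more flexible than a single regular expression.

```python
import re

# Hypothetical toy grammar: "<name> ordered <quantity> <item>".
ORDER_PATTERN = re.compile(r"(?P<name>\w+) ordered (?P<quantity>\d+) (?P<item>\w+)")


def understand(sentence: str) -> dict:
    """NLU direction: map free text to a structured record."""
    match = ORDER_PATTERN.search(sentence)
    if match is None:
        raise ValueError(f"cannot parse: {sentence!r}")
    record = match.groupdict()
    record["quantity"] = int(record["quantity"])
    return record


def generate(record: dict) -> str:
    """NLG direction: render a structured record as a sentence."""
    return f"{record['name']} ordered {record['quantity']} {record['item']}."


record = understand("Yesterday Maria ordered 3 pizzas for the team.")
print(record)
print(generate(record))
```

The structured record drops everything the pattern does not capture ("Yesterday", "for the team"), which shows in miniature why going from unstructured to structured text always involves deciding what information to keep.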

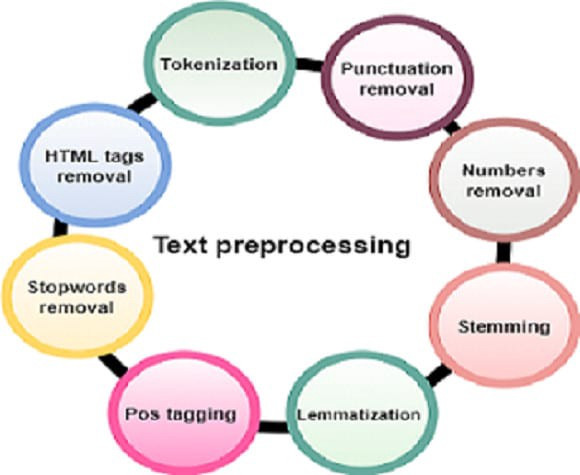

- Stages of NLP

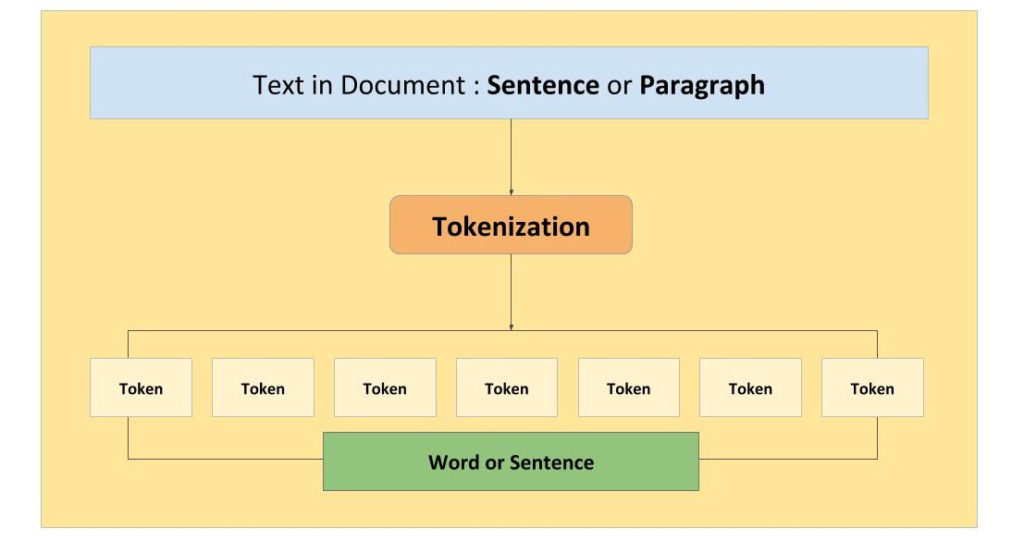

- The first stage is called tokenization. A string of words or sentences is broken down into components or tokens. This retains the essence of each word in the text.

- The next step is stemming, where the affixes are removed from the words to derive the stem. For example, “runs” and “running” both have the stem “run.” An irregular form such as “ran” cannot be reduced by stripping affixes and is handled in the next stage.

- Lemmatization is the next stage. The algorithm looks the word up in a dictionary and determines its root form (lemma) from its meaning in the relevant context. For example, the lemma of “ran” is “run,” and the lemma of “better” is “good,” not “bet.”

- Several words have multiple meanings, which depend on the context of the text. For instance, in the phrase “give me a call,” “call” is a noun. But in “call the doctor,” “call” is a verb. In this stage, NLP analyzes the position and context of the token to determine its grammatical role, which is called part-of-speech tagging.

- The next stage is known as “named entity recognition.” In this stage, the algorithm analyzes the entity associated with a token. For example, the token “London” is associated with location, and “Google” is associated with an organization.

- Chunking is the final stage of natural language processing, which picks individual tokens and groups them into larger meaningful units, such as noun phrases.

All these stages can be run with NLTK (the Natural Language Toolkit), an open-source library written in Python. It is one of several libraries commonly used for NLP and text analysis.
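The first three stages can be sketched in a few lines. The example below deliberately uses only Python's standard library rather than NLTK, so the suffix list and the lemma dictionary are toy assumptions made up for this illustration, not what a real stemmer or lemmatizer uses.

```python
import re

# Toy resources (assumptions for illustration; real systems use large dictionaries).
SUFFIXES = ("ning", "ing", "s")  # checked in this order
LEMMAS = {"ran": "run", "runs": "run", "running": "run", "better": "good"}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def stem(token: str) -> str:
    """Strip the first matching suffix; no dictionary is consulted."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def lemmatize(token: str) -> str:
    """Look the token up in a dictionary of known word forms."""
    return LEMMAS.get(token, token)


tokens = tokenize("She runs better than he ran while running.")
for token in tokens:
    print(f"{token:8} stem={stem(token):8} lemma={lemmatize(token)}")
```

The suffix stripper reduces "runs" and "running" to "run" but leaves "ran" and "better" untouched, while the dictionary lookup maps them to "run" and "good". That difference is the practical distinction between stemming and lemmatization.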

The Evolution of Natural Language Processing

- History of NLP

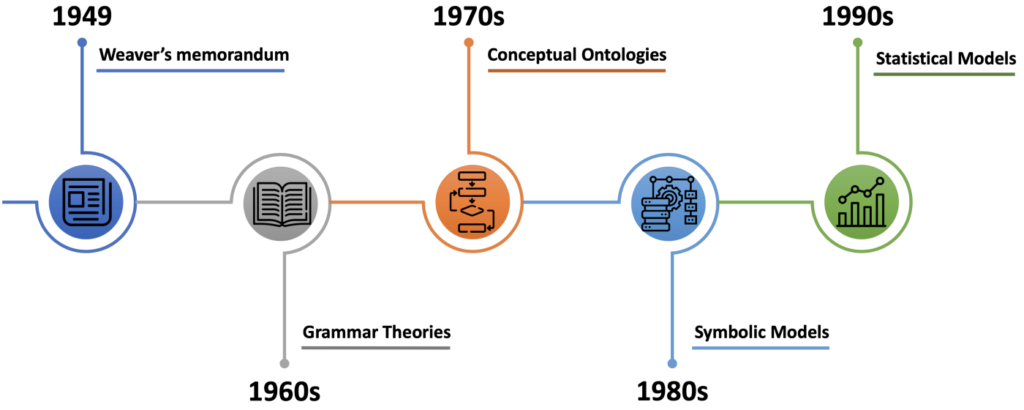

The evolution of NLP is an ongoing process. The earliest work of NLP started as machine translation, which was simplistic in approach. The idea was to convert one human language into another, and it began with converting Russian into English. This led to converting human language into computer language and vice versa.

In 1952, Bell Labs created Audrey, the first speech recognition system. It could recognize all ten numerical digits. However, it was abandoned because it was faster to input telephone numbers with a finger. In 1962 IBM demonstrated a shoebox-sized machine capable of recognizing 16 words.

Harpy was developed at Carnegie Mellon University in the 1970s under a DARPA-funded speech research program that began in 1971. It was the first system to recognize over a thousand words. The evolution of natural language processing gained momentum in the 1980s, when real-time speech recognition became possible thanks to advances in computing performance.

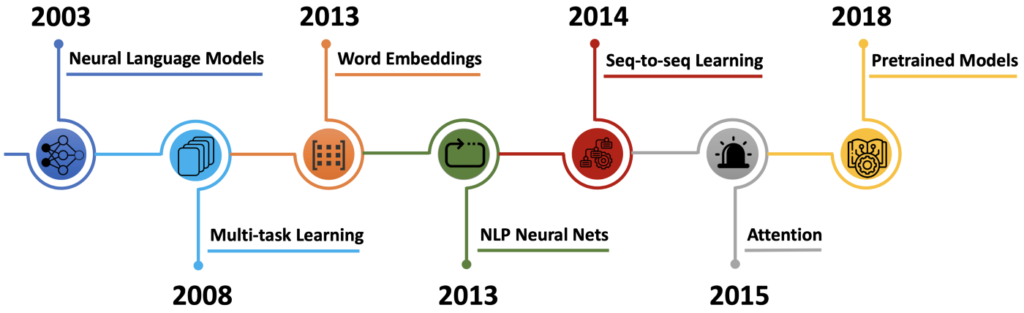

There was also innovation in algorithms for processing human languages, which discarded rigid rules and moved to machine learning techniques that could learn from existing data of natural languages.

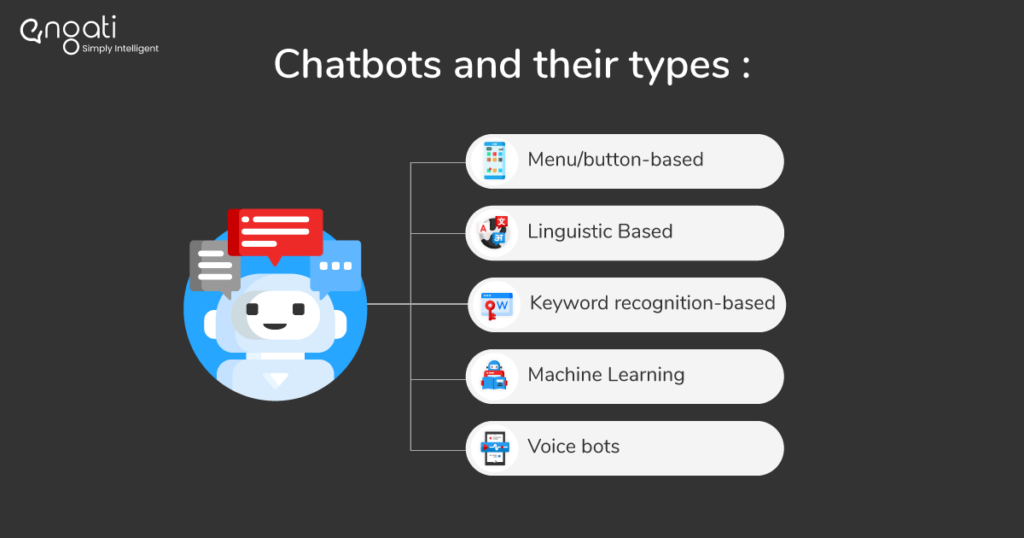

Earlier, chatbots were rule-based: experts would encode rules mapping what a user might say to what an appropriate reply should be. However, this was a tedious process and yielded limited possibilities.

An early example of rule-based NLP was ELIZA, created at MIT in the mid-1960s. ELIZA used syntactic pattern-matching rules to pick out key phrases in the written text, which it would turn around and ask the user about.

NLP has evolved considerably over the last fifty years. Computational grammar and statistics gave the field a different direction, giving rise to statistical language processing and information extraction.
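A rule-based chatbot of this kind can be sketched as a list of patterns paired with reply templates. The rules below are hypothetical and written for this illustration; they are not taken from the original program's script.

```python
import re

# Hypothetical rules: (pattern, reply template). Invented for this sketch.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def reply(utterance: str) -> str:
    """Return the reply of the first rule whose pattern matches."""
    cleaned = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Turn the captured phrase around into a question for the user.
            return template.format(match.group(1))
    return FALLBACK


print(reply("I feel tired today."))
print(reply("The weather is nice."))
```

Every behavior has to be written by hand as a rule, and anything outside the rules falls through to the generic fallback, which illustrates why this approach was tedious and limited.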

- Current trends in NLP

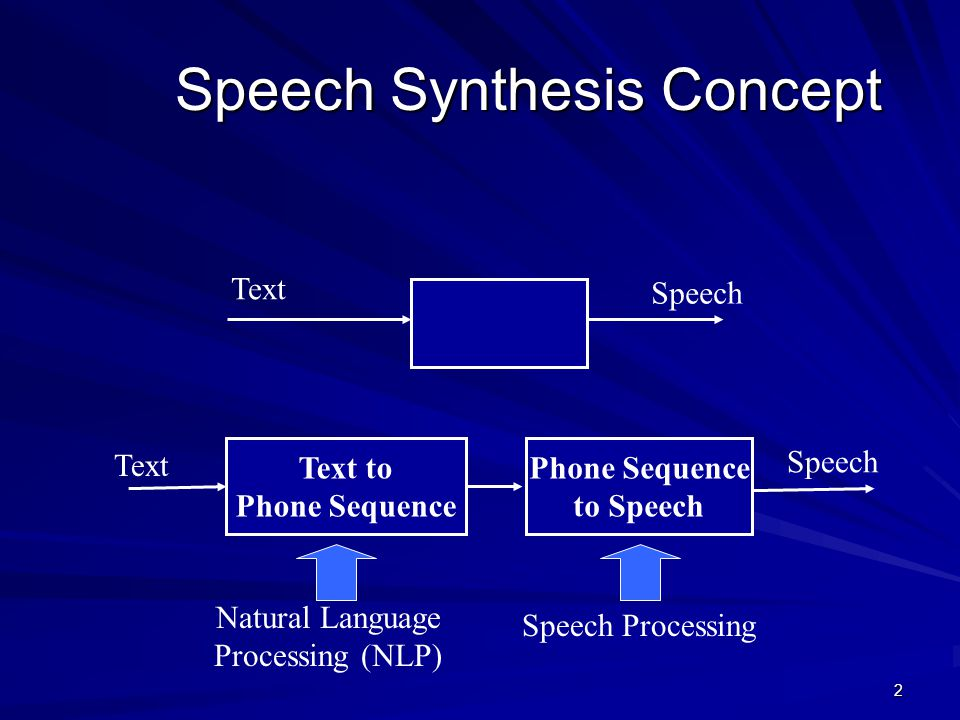

With the evolution of NLP, speech recognition systems are using deep neural networks. Different vowels or sounds have different frequencies, which are discernible on a spectrogram.

This allows computers to recognize spoken vowels and words. Each sound is called a phoneme, and speech recognition software knows what these phonemes look like. Along with analyzing different words, NLP helps discern where sentences begin and end. And ultimately, speech is converted to text.

Speech synthesis gives computers the ability to output speech. Early synthesized speech sounded discontinuous and robotic. This was very prominent in the hand-operated machine from Bell Labs, but today’s computer voices, like Siri and Alexa, sound far more natural.

We are now seeing an explosion of voice interfaces in phones and cars. This creates a positive feedback loop: people use voice interaction more often, which gives companies more data to work with.

This enables better accuracy, leading to people using voice more, and the loop continues.

NLP evolution has happened by leaps and bounds in the last decade. NLP integrated with deep learning and machine learning has enabled chatbots and virtual assistants to carry out complicated interactions.

Chatbots now operate beyond the domain of customer interactions. They can handle human resources and healthcare, too. NLP in healthcare can monitor treatments and analyze reports and health records. Cognitive analytics and NLP are combined to automate routine tasks.

- Various NLP Algorithms

The evolution of NLP has happened with time and advancements in language technology. Data scientists developed some powerful algorithms along the way; some of them are as follows:

- Bag of words: This model counts the frequency of each unique word in a document, ignoring word order, so that documents with similar word counts can be treated as similar. However, with millions of distinct words spread across millions of documents, the resulting count vectors become very large and sparse, which makes them costly to store and process.

- TF-IDF: TF (term frequency) is the number of times a term appears in a document divided by the total number of terms in that document. IDF (inverse document frequency) lowers the weight of terms that appear in many documents. As a result, common “stop words” like “is,” “a,” and “the” receive scores close to zero and are effectively discounted.

- Co-occurrence matrix: This model was developed because the previous models could not capture the context needed to resolve semantic ambiguity. It records how often words appear near each other, but it requires a lot of memory to store all the data.

- Transformer models: The original transformer is an encoder-decoder model built on attention, a mechanism loosely inspired by human attention that lets the model weigh the most relevant parts of the input. Because attention handles a whole sequence in parallel, these models can be trained faster than earlier recurrent networks. BERT, developed by Google on top of the transformer’s encoder, has been highly influential in NLP.

Carnegie Mellon University and Google have developed XLNet, another attention-based model that reportedly outperformed BERT on 20 tasks. BERT has noticeably improved search engine results. Megatron and GPT-3 are also based on the transformer architecture, which has since been applied to speech synthesis and image processing as well.

In the encoder-decoder model, the encoder builds a representation of what the machine should retain from the text. The decoder uses that representation to decide the appropriate reply or action.

For example, in the sentence “I would like some strawberry ___,” the ideal words for the blank would be “cake” or “milkshake.” Here, the encoder focuses on the word “strawberry,” and the decoder pulls the right word from a cluster of terms related to strawberry.
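The three count-based models in the list above can be sketched with Python's standard library. The three-sentence corpus is made up for this illustration, and `log(N / df)` is only one common variant of the IDF formula; libraries often apply additional smoothing.

```python
import math
from collections import Counter

# Made-up corpus for illustration.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs are pets",
]
tokenized = [doc.split() for doc in docs]


def bag_of_words(tokens):
    """Count how often each word occurs in one document."""
    return Counter(tokens)


def tf_idf(tokens, corpus):
    """Weight each word by term frequency times inverse document frequency."""
    counts = Counter(tokens)
    weights = {}
    for word, count in counts.items():
        tf = count / len(tokens)
        containing = sum(1 for other in corpus if word in other)
        idf = math.log(len(corpus) / containing)  # one common IDF variant
        weights[word] = tf * idf
    return weights


def cooccurrence(corpus, window=1):
    """Count ordered word pairs that appear within `window` positions."""
    pairs = Counter()
    for tokens in corpus:
        for i, word in enumerate(tokens):
            start, stop = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    pairs[(word, tokens[j])] += 1
    return pairs


print(bag_of_words(tokenized[0]))
print(tf_idf(tokenized[0], tokenized))
print(cooccurrence(tokenized)[("sat", "on")])
```

In the first document, "the" occurs twice as often as "cat" but also appears in a second document, so its TF-IDF weight ends up lower than that of "cat". The co-occurrence counter stores one entry per word pair, which hints at why memory use grows quickly on a real vocabulary.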
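The idea of focusing on one word can be illustrated with scaled dot-product attention, the core operation of transformer models. This is a minimal sketch for a single query vector; the two-dimensional token vectors and the query are made-up numbers, whereas a trained model learns these values from data.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output


# Made-up 2-dimensional vectors for the tokens of "I would like some strawberry".
tokens = ["I", "would", "like", "some", "strawberry"]
keys = [[0.1, 0.0], [0.0, 0.1], [0.2, 0.1], [0.1, 0.2], [1.0, 1.0]]
values = keys          # reuse the keys as values to keep the toy small
query = [1.0, 1.0]     # hypothetical query vector for the blank position

weights, context = attention(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token:11} {weight:.2f}")
```

With these numbers, "strawberry" receives the largest weight, so the context vector passed on for predicting the blank is dominated by that word.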

- Future Predictions of NLP

NLP evolves every minute as more and more unstructured data is accumulated. So, there is no end to the evolution of natural language processing.

- As more and more data is generated, NLP will take over to analyze, comprehend, and store the data. This will help digital marketers analyze gigabytes of data in minutes and strategize marketing policies accordingly.

- NLP concerns itself with human language. However, NLP evolution will eventually bring into its domain non-verbal communications, like body language, gestures, and facial expressions.

To analyze non-verbal communications, NLP must be able to use biometrics like facial recognition and retina scanning. Just as NLP is adept at understanding sentiments behind sentences, it will eventually be able to read the feelings behind expressions. If this integration between biometrics and NLP happens, the interaction between humans and computers will take on a whole new meaning.

- The next massive step in AI is the creation of humanoid robots by integrating NLP with biometrics. Through robots, computer-human interaction will move into computer-human communication. Virtual assistants do not even begin to cover the scope of NLP in the future. When coupled with advancements in biometrics, NLP evolution can create robots that can see, touch, hear, and speak, much like humans.

- NLP will shape the communication technologies of the future.

Importance of Natural Language Processing

NLP solves the root problem of machines not understanding human language. With its evolution, NLP has surpassed traditional applications, and AI is being used to replace human resources in several domains.

Let’s look at the importance of NLP in today’s digital world:

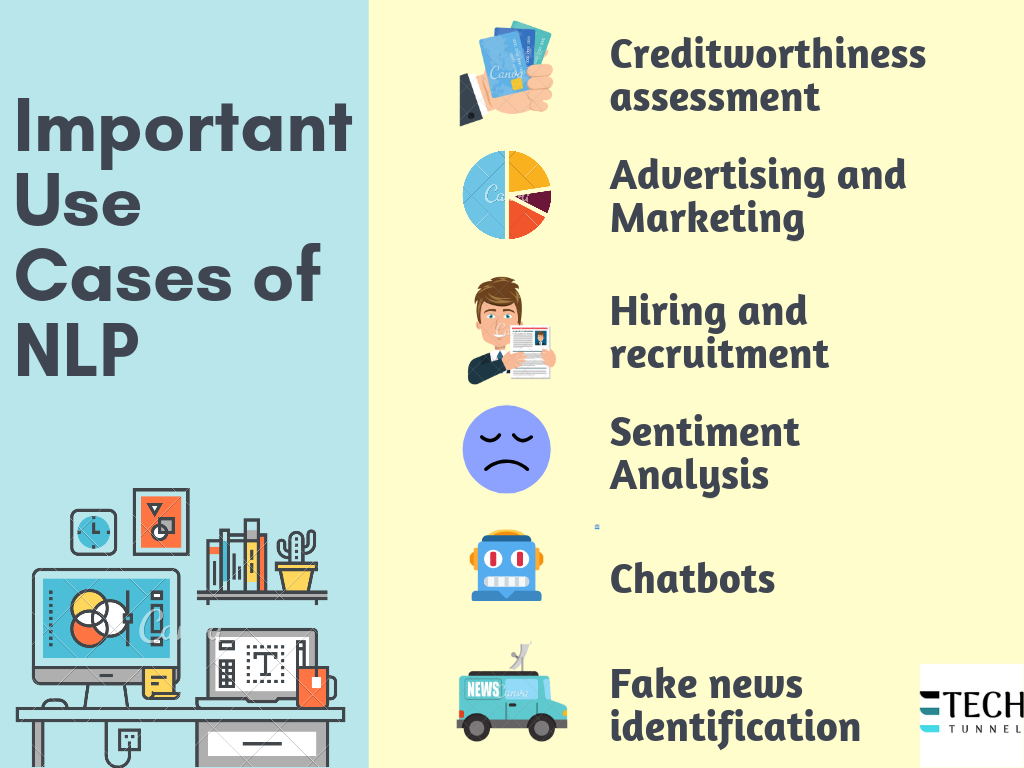

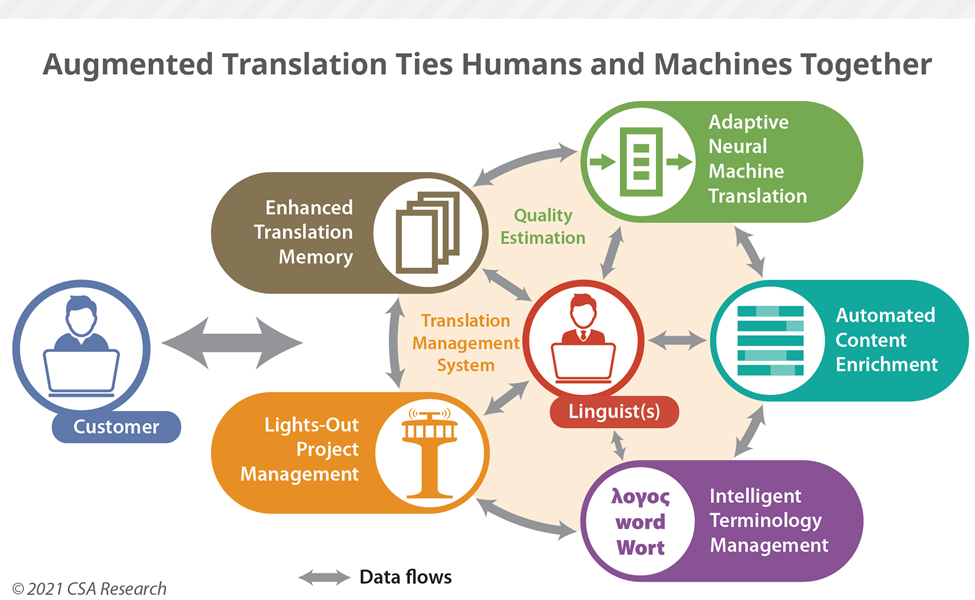

- “Machine translation” is a significant application of NLP. NLP is behind the widely used Google Translate, which converts one language into another in real time. NLP helps the translation system understand the context of sentences and the meaning of words.

- Virtual assistants like Cortana, Siri, and Alexa are boons of NLP evolution. These assistants comprehend what you say, give befitting replies, or take appropriate actions, and do all this through NLP.

- Intelligent chatbots are taking the world of customer service by storm. They are replacing human assistance and conversing with customers just like humans do. They interpret the written text and decide on actions accordingly. NLP is the working mechanism behind such chatbots.

- NLP also helps in sentiment analysis. It recognizes the sentiment behind posts. For instance, it determines whether a review is positive, negative, serious or sarcastic. NLP mechanisms help companies like Twitter remove tweets with foul language, etc.

- NLP automatically sorts our emails into social, promotions, inbox, and spam categories. This NLP task is known as text classification.

- Other uses of NLP include spell checking, keyword research, and information extraction. Plagiarism checkers also run on NLP programs.

- NLP also drives advertisement recommendations. It matches advertisements with our browsing history.

- NLP helps machines understand natural languages and perform language-related tasks. It makes it possible for computers to analyze more language-based data than humans.

It is impossible to comprehend these staggering volumes of unstructured data available by conventional means. This is where NLP comes in. The evolution of NLP has enabled machines to structure and analyze text data tirelessly.

- A language has millions of words, several dialects, and thousands of grammatical and structural rules. It is essential to comprehend the syntactic and semantic context of human text, which computers cannot do on their own.

NLP is vital in this light as it helps to resolve any ambiguity related to natural languages and adds valuable numeric structure to the information that machines can process. Some examples are speech recognition and text analytics.
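The text classification task mentioned in the list above (sorting email into categories) shows how text is turned into numbers a machine can process. Below is a minimal naive Bayes sketch using word counts. The four training messages and the two labels are made up for this illustration, and production spam filters use far more data and features.

```python
import math
from collections import Counter, defaultdict

# Made-up training data for illustration.
TRAINING = [
    ("win a free prize now", "spam"),
    ("free offer click now", "spam"),
    ("meeting agenda for monday", "inbox"),
    ("project report attached for review", "inbox"),
]


def train(examples):
    """Collect per-label word counts and label frequencies."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocabulary


def classify(text, model):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocabulary = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.split():
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING)
print(classify("free prize inside", model))
print(classify("monday project meeting", model))
```

Add-one smoothing keeps an unseen word such as "inside" from driving a probability to zero, so the classifier can still decide based on the words it does recognize.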

Conclusion

Thus, NLP treats natural languages like Lego, breaking them into building blocks, and makes computers adept at understanding and processing human languages. This enables machines to answer questions and obey commands.

Virtual assistants are the most prominent application of natural language processing and a benchmark of how far NLP evolution has come. By studying the evolution of NLP, data scientists can predict what form this fascinating branch of AI will take in the future.

One can safely conclude that speech technologies will become a popular form of interaction with computers, just like the keyboards, screens, and other input-output devices that we use today.

FAQs

The evolution of NLP is happening at this very moment. NLP evolves with every tweet, voice search, email, WhatsApp message, etc. MarketsandMarkets has projected that the NLP market will grow at a CAGR of 20.3% through 2026. According to Statista, the NLP market will grow 14 times between 2017 and 2025.

Semantic, syntactic, and pragmatic ambiguities are the biggest challenges that NLP has to overcome to process natural languages accurately.

NLP is a fascinating and booming subfield of data science. It is changing how we interact with machines and how we use speech technologies.

NLP has two subfields—natural language understanding (NLU) and natural language generation (NLG).

Data scientists have developed NLP to allow machines to interpret and process human languages. With the evolution of NLP, machines can now interact with humans, too. Siri and Alexa are some examples of the latest applications of NLP.
